OCaml Planet

The OCaml Planet aggregates various blogs from the OCaml community. If you would like to be added, read the Planet syndication HOWTO.

Our Experience at Tarides: Projects From Our Internships in 2023 — Tarides, Sep 15, 2023

Our Experience at Tarides: Projects From Our Internships in 2023 — Tarides, Sep 15, 2023

Internships at Tarides

We regularly have the pleasure of hosting internships where we work with engineers from all over the world on a diverse range of projects. By collaborating with people who are relatively new to the OCaml ecosystem, we get to benefit from their perspective. Seeing things with fresh eyes helps with identifying holes in documentation, gaps in workflows, as well as other ways to improve user experience.

In turn, we offer interns the opportunity to work on a project in OCaml in…

Read more...Internships at Tarides

We regularly have the pleasure of hosting internships where we work with engineers from all over the world on a diverse range of projects. By collaborating with people who are relatively new to the OCaml ecosystem, we get to benefit from their perspective. Seeing things with fresh eyes helps with identifying holes in documentation, gaps in workflows, as well as other ways to improve user experience.

In turn, we offer interns the opportunity to work on a project in OCaml in close collaboration with a mentor. This affords participants a great deal of independence, while still having the support and expertise of an experienced engineer at their disposal. During the course of their internship, participants will learn more about OCaml and strengthen their skills in functional programming. They will also have the chance to complete a project with real-world implications, contributing meaningfully to an open-source ecosystem.

Does this sound like something you would like to do? Appplications for our next round of internships open early next year, and you will be able to apply on our website around that time.

Let's check out some reports from this summer's internships, and see what the teams got up to!

Dipesh: Par_incr - A Library for Incremental Computation With Support for Parallelism

Background

I am a final year CS student from NIT Trichy. I had tried to learn Haskell in my second year but didn't really succeed. I enjoy learning about languages and their features, however, so I had learnt some OCaml by the end of my third year but not tried out any fancy features.

I found out about the internship from X (Twitter) in one of KC's tweets, but I knew about Tarides and the good work they do since I had worked with KC in the past. I messaged him to check the rules and ask if recent graduates could apply. He confirmed that they could and encouraged me to apply.

The interview itself was very pleasant; it was as if it was just me talking and discussing things with interviewers (all interviews ever should be like this!). I thought I wouldn't get it but thankfully I did.

Goal of the Project

The goal of my project was to build an incremental library with support for parallelism constructs using OCaml 5.0. Incremental computation is a software feature which attempts to optimise efficiency by only recomputing outputs that depend on changed data. The library we built, Par_incr, takes advantage of the new parallelism features in OCaml 5.0 to create an even more efficent incremental computation library.

Journey

I was somewhat familiar with OCaml so I brushed up on some concepts using the Real World OCaml textbook. OCaml.org also has a lot of resources for learning OCaml aimed at programmers of any level(beginner to advanced). For any non-trivial doubts, I would just ask my amazing mentor (Vesa) or someone else at Tarides (you can always find someone who's an expert in whatever question you have relating to OCaml) for help.

Initially, we wanted to finalise the module signature for the library. Vesa suggested a Monadic interface for the library, and it felt like the right choice.

After that was done, I started on the implementation and got something working. We wanted to check how it fared against existing libraries, so I wrote benchmarks comparing the library to current_incr and incremental.

I remember one particular bug on which I wasted almost 2 full days. I had something like this in the code:

if not is_same then t.value <- x;

Reader_list.iter readers Rsp.RNode.mark_dirtywhich should've actually been like this:

if not is_same then (t.value <- x;

Reader_list.iter readers Rsp.RNode.mark_dirty)This caused a huge performance hit because it would cause a lot of unnecessary work. You can learn more about the library from here.

Debugging this was quite fun and frustrating. It didn't even occur to me that this part could be the problem, so I was banging my head against the wall thinking I did something wrong somewhere else. I was trying out different things, but thankfully making changes to the code was enjoyable because the typechecker was always there holding my hand.

Overall it was an amazing journey. Getting to work in such an amazing environment here was a blessing for me, and I'm very grateful to have gotten this opportunity. I learnt a lot from Vesa throughout the internship and from many amazing folks at Tarides.

Challenges

The biggest challenge was to make the library performant. Since OCaml is a language with a garbage collector, you have to take special care when allocating things, since allocation isn't cheap. Another difficulty was trying to find things relating to compiler internals, so how certain things get compiled when certain optimisations kick in, etc. This is something that can be improved, but I get that it's quite difficult to keep track of documentation of large open-source compiler codebases that keep changing.

Takeaways and Best Parts

The best part was learning about optimisations, profiling, benchmarking, and improving performance, looking into assembly trying to figure out whether some things got inlined, as well as my discussions with Vesa.

The discussions with Vesa made me want to explore Emacs more, and his advice will definitely help me throughout my career. I'm also much more confident in OCaml and will probably use it whenever possible. I got to learn about all sorts of cool things being done by the Multicore team and other Tarides folks.

Shreyas: Olinkcheck

Background

I'm a final year CS student from NIT Trichy. I had never been exposed to functional programming before, but I had heard cool things about Haskell and OCaml and how Rust features were inspired by these languages. I also followed KC on Twitter from before, when I had been researching internships and professors whose work I found interesting.

When KC tweeted about openings for interns at Tarides, I opened the application doc to read about all the cool projects listed, but I didn't know any functional programming. I still applied anyways, thinking that the worst that could happen is I get rejected, no big deal.

Fast forward to a really fun interview. (No Data Structures and Algorithms? Yay! Easily my favorite interview experience so far.) It was more of a discussion than a question-and-answer.

Goal of the Project

The goal of my project was to create a tool that could be used to check for broken HTTP links, as well as present the broken link information to the user. The tool would then be integrated into OCaml.org through GitHub, to check for broken links on the website. Since OCaml.org is such a large website with lots of content, it is difficult to manually keep up with all the links. However, broken links negatively impact the user experience, and may also make pages on the website less visible to people who would otherwise be able to find the information they need.

Journey

Learning OCaml

I used these resources to learn OCaml:

- From the book 'Real World OCaml'

- From ocaml.org/learn

- By reading others' code

- Writing something and changing it until the compiler stops complaining

- UTop

- Stackoverflow

- Setting up a developer environment (I was convinced by friends at college that 'real programmers' use Vim / Emacs on Arch Linux)

Categories of Programmers and Categories in Programming

I spent some time going through library code to figure out how to actually use it. I could hack something together to work for Markdown files, and I slowly learned how to write more idiomatic OCaml (thanks to my mentor Cuihtlauac). As an imperative programmer, I was used to giving names to intermediate things, which wasn't really necessary with OCaml.

I learnt a bit about Lwt and came across the term Monad, which is, of course, as is widely known - a monoid in the category of endofunctors. (Thankfully there were much better explanations and documentation online).

Everything was going fine - I was slowly iterating on the code, making it incrementally better and adding more tests, until the first major rewrite. I was using an outdated version of a library!

That wasn't too painful, I knew what parsing code looked like already - but the structure of the document was now different.

Another library (hyper) had unfixed issues for over a year, so I swapped that out too.

I went back to my old habit of writing imperative OCaml (!) using refs. They have their place, but can be avoided when it's possible. But this was important - it helped me really imbibe the idea that functions are first class, what functional code looks like, and how I can start thinking like a functional programmer. The humble looking List.fold_left was the key to my enlightenment.

Or so I thought. I hadn't met functors yet. It is, after all, just a mapping between two categories. (No, please.) Again, Cuiht really broke it down to a point where I could start understanding what a functor in OCaml is, which eventually led me to discover the power of the OCaml module system.

Seeing it Work

After some "hacky" fixes and regular expression magic (resulting from a lot of discussions with Sabine, because I thought I hit a fundamental roadblock here and thought it might be very hard to do the project (!)), I could get it to run as a GitHub CI action, which lead to an automated pull request. I could also integrate it into Voodoo, the package documentation generator, and it is now being tested in the staging pipeline.

I've Had it All Wrong From the Beginning

By this time I had read a lot of other people's code and learnt enough from Cuiht to realise, yet again, that my code was bad. The functional programmer doesn't rely on the name of the function (what does the function v do? Or pp?). The meaning is taken from the context and the signature. So I had functions that looked like

val do_this_thing : a -> b -> c -> d -> ...with no clue as to what those arguments mean. Someone reading the code would be forced to look into the source code to understand what that means. Now my target was to have a decent looking interface when someone said #show Olinkcheck;; on utop. That's how I used other libraries, so I wanted others to be able to use mine like that too.

Biggest Challenge

My project was a practical problem, as opposed to a theoretical one like a data structure. So the challenges were also practical. Not everyone follows the same formatting while writing text-based files (let's first agree on tabs vs spaces?), and not all parsers are perfect. In the ideal world I could manipulate a syntax tree data structure which turns back into a string with the original formatting, webservers wouldn't care how many links I request from them, and there would be well defined regular expressions to find URLs amongst other text, but alas, no. None of these things are true. Text based data is convenient because of the loose requirements. Webservers can't realistically be fine with a user asking it for 7000+ links in a short time.

The Best Part

The best part for me was easily the opportunity to learn from people who are much more experienced than I am and to see something written by me be actually used in the real world.

Adithya: Domain-Safe Data Structures for Multicore OCaml

Background

I am a final year CS student at NITK Surathkal. Before this internship, I had only done a little bit of functional programming in Scala, so programming in OCaml was something very new to me. However, I was pretty excited to work on this because OCaml had only recently got Multicore support, and it was a niche area to explore.

I got to know about the internship from one of KC's tweets, and how I got to know about KC and the work he does is a pretty random incident where I needed his help to contact another professor to discuss some of my previous research internship work in a related area.

The interview experience was amongst the best ones I've had, very open ended discussions and friendly interviewers.

Goal of the Project

I was a part of the Multicore applications team and was mentored by Carine. The goal of my project was to add lock-based data structures to the Saturn library that maintains parallelism-safe data structures for Multicore OCaml.

The first step was to create a bounded queue, which is based on a Michael Scott queue. This type of queue has two locks, one for the head and one for the tail node. I also investigated fine-grained versus coarse-grained lists, double-linked lists, and finally a lock-free priority queue which was implemented on top of a lock-free skiplist.

Towards the later part of the internship, I also worked on lock-free data structures.

Journey

Initially, I started off slow since I was just getting familiar with the OCaml environment and language features. My main 2 resources to learn Ocaml was Real World OCaml and OCaml.org. Other than this, I spent a significant amount of time going through the book called The Art of Multiprocessor Programming, since that was the main reference point for my project. I also had to dive into some research papers cited in the book to get a better understanding of the implementation and some nitty-gritty details.

Over the course of the internship, I gained a lot of insights about minor details while programming for multicore systems, as well as OCaml language features that can have a significant impact on performance. Something that never struck me before was how much worse using structural equality (=) instead of physical equality (==) could be depending on the scenario.

Since I was interning on-site at the Paris office, it was very easy for me to clarify any doubts or difficulties I faced whenever required, as most people at Tarides have a very high level of expertise in OCaml and are really helpful. I often had to rewrite many functions or make major changes, but thanks to OCaml features such as static checking and type inference, it was pretty easy and relatively quick to make those modifications.

Challenges

The biggest challenge was debugging and reasoning about performance of one implementation over the other. Since I was writing parallel programs, debugging was difficult because of the many edge case scenarios that are hard to detect and can lead to deadlocks or errors in output. I remember spending an entire day sometimes finding the bug, but in the end it was really satisfying to fix it. Comparing different implementations and trying to find if any possible optimisations can be done was quite interesting and challenging.

The Best Part

Compared to my previous internships, Tarides was a unique experience since it is a pretty small company with a great culture working on some niche areas. There aren't many other places doing this kind of work. So if someone is interested in computer systems and programming languages, I would definitely recommend them to intern here. Getting the opportunity to work from the Paris office and visit Europe was definitely an unexpected yet pleasant surprise.

Want to Strengthen Your OCaml Skills?

If you're looking to learn more about functional programming in a supportive environment, you sound like an excellent candidate for our next round of internships! The next round is coming up early next year and we would be delighted if you would apply! Keep an eye on our website for more information or contact us here.

Hide

Beyond TypeScript: Differences Between Typed Languages — Ahrefs, Sep 14, 2023

Beyond TypeScript: Differences Between Typed Languages — Ahrefs, Sep 14, 2023

For the past six years, I have been working with OCaml, most of this time has been spent writing code at Ahrefs to process a lot of data and show it to users in a way that makes sense.

OCaml is a language designed with types in mind. It took me some time to learn the language, its syntax, and semantics, but once I did, I noticed a significant difference in the way I would write code and colaborate with others.

Maintaining codebases became much easier, regardless of their size. And day-to-day wor…

Read more...For the past six years, I have been working with OCaml, most of this time has been spent writing code at Ahrefs to process a lot of data and show it to users in a way that makes sense.

OCaml is a language designed with types in mind. It took me some time to learn the language, its syntax, and semantics, but once I did, I noticed a significant difference in the way I would write code and colaborate with others.

Maintaining codebases became much easier, regardless of their size. And day-to-day work felt more like having a super pro sidekick that helped me identify issues in the code as I refactored it. This was a very different feeling from what I had experienced with TypeScript and Flow.

Most of the differences, especially those related to the type system, are quite subtle. Therefore, it is not easy to explain them without experiencing them firsthand while working with a real-world codebase.

However, in this post, I will attempt to compare some of the things you can do in OCaml, and explain them from the perspective of a TypeScript developer.

Before every snippet of code, we will provide links like this: (try). These links will go either to the TypeScript playground for TypeScript snippets, or to the Melange playground, for OCaml snippets. Melange is a backend for the OCaml compiler that emits JavaScript.

Without further ado, let’s go!

Photo by Bernice Tong on Unsplash

Syntax

OCaml’s syntax is very minimal (and, in my opinion, quite nice once you get used to it), but it is also quite different from the syntax in mainstream languages like JavaScript, C, or Java.

Here is a simple snippet of code in OCaml syntax (try):

let rec range a b =

if a > b then []

else a :: range (a + 1) b

let my_range = range 0 10

OCaml is built on a mathematical foundation called lambda calculus. In lambda calculus, function definitions and applications don’t use parentheses. So it was natural to design OCaml with similar syntax to that of lambda calculus.

However, the syntax might be too foreign for someone used to JavaScript. Luckily, there is a way to write OCaml programs using a different syntax which is much closer to the JavaScript one. This syntax is called Reason syntax, and it will make it much easier to get started with OCaml if you are familiar with JavaScript.

Let’s translate the example above into Reason syntax (you can translate any OCaml program to Reason syntax from the playground!):

let rec range = (a, b) =>

if (a > b) {

[];

} else {

[a, ...range(a + 1, b)];

};

let myRange = range(0, 10);

This syntax is fully supported throughout the entire OCaml ecosystem, and you can use it to build:

- native applications if you need fast startups or high speed of execution

- or compile to JavaScript if you need to run your application in the browser.

To use Reason syntax, you just need to name your source file with the .re extension instead of.ml, and you're good to go.

Since Reason syntax is widely supported and is closer to TypeScript than OCaml syntax, we will use Reason syntax for all code snippets throughout the rest of the article. Although understanding OCaml syntax has some advantages, such as allowing us to understand a larger body of source code, blog posts, and tutorials, there is absolutely no rush to do so, and you can always learn it at any time in the future. If you’re curious, we’ll provide links to the Melange playground for every snippet, so you can switch syntaxes to see how a Reason program looks in OCaml syntax, or vice versa.

Data types

OCaml has great support for data types, which are types that allow values to be contained within them. They are sometimes called algebraic data types (ADTs).

One example is tuples, which can be used to represent a point in a 2-dimensional space (try):

type point = (float, float);

let p1: point = (1.2, 4.3);

One difference with TypeScript is that OCaml tuples are their own type, different from lists or arrays, whereas in TypeScript, tuples are a subtype of arrays.

Let’s see this in practice. This is a valid TypeScript program (try):

let tuple: [string, string] = ["foo", "bar"];

let len = (a: string[]) => a.length;

let u = len(tuple)

Note how the len function is annotated to take an array of strings as input, but then we apply it and pass tuple, which has a type [string, string].

In OCaml, this will fail to compile (try):

let tuple: (string, string) = ("foo", "bar");

let len = (a: array(string)) => Array.length(a);

let u = len(tuple)

// ^^^^^

// Error This expression has type (string, string)

// but an expression was expected of type array(string)Another data type is records. Records are similar to tuples, but each “container” in the type is labeled. (try):

type point = {

x: float,

y: float,

};

let p1: point = {x: 1.2, y: 4.3};Records are similar to object types in TypeScript, but there are subtle differences in how the type system works with these types. In TypeScript, object types are structural, which means a function that works over an object type can be applied to another object type as long as they share some properties. Here’s an example ( try):

interface Todo {

title: string;

description: string;

year: number;

}

interface ShorterTodo {

title: string;

description: string;

}

const title = (todo: ShorterTodo) => console.log(todo.title);

const todo: Todo = { title: "foo", description: "bar", year: 2021 }

title(todo)In OCaml, you have a choice. Record types are nominal, so a function that takes a record type can only take values of that type. Let’s look at the same example (try):

type todo = {

title: string,

description: string,

year: int,

};

type shorterTodo = {

title: string,

description: string,

};

let title = (todo: shorterTodo) => Js.log(todo.title);

let todo: todo = {title: "foo", description: "bar", year: 2021};

title(todo);

// ^^^^

// Error This expression has type todo but an expression was expected of

// type shorterTodoBut if we want to use structural types, OCaml objects also offer that option. Here is an example using Js.t object types in Melange (try):

let printTitle = todo => {

Js.log(todo##title);

};

let todo = {"title": "foo", "description": "bar", "year": 2021};

printTitle(todo);

let shorterTodo = {"title": "foo", "description": "bar"};

printTitle(shorterTodo);To conclude the topic of ADTs, one of the most useful tools in the OCaml toolbox are variants, also known as sum types or tagged unions.

The simplest variants are similar to TypeScript enums (try):

type shape =

| Point

| Circle

| Rectangle;

The individual names of the values of a variant are called constructors in OCaml. In the example above, the constructors are Point, Circle, and Rectangle. Constructors in OCaml have a different meaning than the reserved wordconstructor in JavaScript.

Unlike TypeScript enums, OCaml does not require prefixing variant values with the type name. The type inference system will automatically infer them as long as the type is in scope.

This TypeScript code (try):

enum Shape {

Point,

Circle,

Rectangle

}

let shapes = [

Shape.Point,

Shape.Circle,

Shape.Rectangle,

];Can be written like (try):

type shape =

| Point

| Circle

| Rectangle;

let shapes = [Point, Circle, Rectangle];

Another difference is that, unlike TypeScript enums, OCaml variants can hold data for each constructor. Let’s improve the shape type to include more information about each constructor (try):

type point = (float, float);

type shape =

| Point(point)

| Circle(point, float) /* center and radius */

| Rect(point, point); /* lower-left and upper-right corners */

Something like this is possible in TypeScript using discriminated unions ( try):

type Point = { tag: 'Point'; coords: [number, number] };

type Circle = { tag: 'Circle'; center: [number, number]; radius: number };

type Rect = { tag: 'Rect'; lowerLeft: [number, number]; upperRight: [number, number] };

type Shape = Point | Circle | Rect;The TypeScript representation is slightly more verbose than the OCaml one, as we need to use object literals with a tag property to achieve the same effect. On top of that, there are greater advantages of variants that we will see just right next.

Pattern matching

Pattern matching is one of the killer features of OCaml, along with the inference engine (which we will discuss in the next section).

Let’s take the shape type we defined in the previous example. Pattern matching allows us to conditionally act on values of any type in a concise way. For example (try):

type point = (float, float);

type shape =

| Point(point)

| Circle(point, float) /* center and radius */

| Rect(point, point); /* lower-left and upper-right corners */

let area = shape =>

switch (shape) {

| Point(_) => 0.0

| Circle(_, r) => Float.pi *. r ** 2.0

| Rect((x1, y1), (x2, y2)) =>

let w = x2 -. x1;

let h = y2 -. y1;

w *. h;

};

Here is the equivalent code in TypeScript (try):

type Point = { tag: 'Point'; coords: [number, number] };

type Circle = { tag: 'Circle'; center: [number, number]; radius: number };

type Rect = { tag: 'Rect'; lowerLeft: [number, number]; upperRight: [number, number] };

type Shape = Point | Circle | Rect;

const area = (shape: Shape): number => {

switch (shape.tag) {

case 'Point':

return 0.0;

case 'Circle':

return Math.PI * Math.pow(shape.radius, 2);

case 'Rect':

const w = shape.upperRight[0] - shape.lowerLeft[0];

const h = shape.upperRight[1] - shape.lowerLeft[1];

return w * h;

default:

// Ensure exhaustive checking, even though this case should never be reached

const exhaustiveCheck: never = shape;

return exhaustiveCheck;

}

};We can observe how in OCaml, the values inside each constructor can be extracted directly from each branch of the switch statement. On the other hand, in TypeScript, we need to first check the tag, and then access the other properties of the object. Additionally, ensuring coverage of all cases in TypeScript using the never type can be more verbose, and functions may be more error-prone if we forget to handle it. In OCaml, exhaustiveness is ensured when using variants, and covering all cases requires no extra effort.

The best thing about pattern matching is that it can be used for anything: basic types like string or int, records, lists, etc.

Here is another example using pattern matching with lists (try):

let rec sumList = lst =>

switch (lst) {

/* Base case: an empty list has a sum of 0. */

| [] => 0

/* Split the list into head and tail. */

| [head, ...tail] =>

/* Recursively sum the tail of the list. */

head + sumList(tail)

};

let numbers = [1, 2, 3, 4, 5];

let result = sumList(numbers);

let () = Js.log(result);

Type annotations are optional

If we wanted to write some identity function in TypeScript, we would do something like (try):

const id: <T>(val: T) => T = val => val

function useId(id: <T>(val: T) => T) {

return [id(10)]

}

While TypeScript generics are very powerful, they lead to really verbose type annotations. As soon as our functions start taking more parameters, or increasing in complexity, the type signatures length increases accordingly.

Plus, the generic annotations have to be carried over to any other functions that compose with the original ones, making maintenance quite cumbersome in some cases.

In OCaml, the type system is based on unification of types. This differs from TypeScript, and allow to infer types for functions (even with generics) without the need of type annotations.

For example, here is how we would write the above snippet in OCaml (try):

let id = value => value;

let useId = id => [id(10)];

The compiler can infer correctly the type of useId is (int => 'a) => list('a).

With OCaml, type annotations are optional. But we can still add type annotations anywhere optionally, if we think it will be useful for documentation purposes (try):

let id: 'a => 'a = value => value;

let useId: (int => 'a) => list('a) = id => [id(10)];

I can not emphasize enough how the simplification seen above, which only involves a single function, can affect a codebase with hundreds, or thousands of more complex functions in it.

Immutability

JavaScript is a language where mutability is pervasive, and working with immutable data structures often require using third party libraries or other complex solutions.

Trying to obtain real immutable values in TypeScript is quite challenging. Historically, it has been hard to prevent mutation of properties inside objects, which was mitigated with as const.

But still, the way the type system has to be flexible to adapt for the dynamism of JavaScript can lead to “leaks” in immutable values.

Let’s see an example (try):

interface MutableValue<T> {

value: T;

}

interface ImmutableValue<T> {

readonly value: T;

}

const i: ImmutableValue<string> = { value: "hi" };

const m: MutableValue<string> = i;

m.value = "hah";As you can see, even when being strict about defining the immutable nature of the value i using TypeScript expressiveness, it is fairly easy to mutate values of that type if they happen to be passed to a function that expects a type similar in shape, but without the readonly flag.

In OCaml, immutability is the default, and it’s guaranteed. Records are immutable (like tuples, lists, and most basic types), but even if we can define mutable fields in them, something like the previous TypeScript leak is not possible (try):

type immutableValue('a) = {value: 'a}

type mutableValue('a) = {mutable value : 'a}

let i: immutableValue(string) = { value: "hi" };

let m: mutableValue(string) = i;

m.value = "hah";When trying to assign i to m we get an error: This expression has type immutableValue(string) but an expression was expected of type mutableValue(string).

No imports

This might not be as impactful of a feature as the ones we just went through, but it is really nice that in OCaml there is no need to manually import values from other modules.

In TypeScript, to use some function bar defined in a module located in../../foo.ts, we have to write:

import {bar} from "../../foo.ts";

let t = bar();In OCaml, libraries and modules in your project are all available for your program to use, so we would just write:

let t = Foo.bar()

The compiler will figure out how to find the paths to the module.

Currying

Currying is the technique of translating the evaluation of a function that takes multiple arguments into evaluating a sequence of functions, each with a single argument. It is a feature that might be more desirable for those looking into learning more about functional programming techniques.

While it is possible to use currying in TypeScript, but it becomes quite verbose (try):

const mix = (a: string) => (b: string) => b + " " + a;

const beef = mix("soaked in BBQ sauce")("beef");

const carrot = function () {

const f = mix("dip in hummus");

return f("carrot");

}();

In OCaml, all functions are curried by default. This is how a similar code would look like (try):

let mix = (a, b) => b ++ " " ++ a;

let beef = mix("soaked in BBQ sauce", "beef");

let carrot = {

let f = mix("dip in hummus");

f("carrot");

};

Build native apps that run fast

One of the best parts of OCaml is how flexible it is in the amount of places your code can run. Your applications written in OCaml can run natively on multiple devices, with very fast starts, as there is no need to start a virtual machine.

The nice thing is that OCaml does not compromise expressiveness or ergonomics to obtain really fast execution times. As this study shows, the language hits a great balance between verbosity (Y axis) and performance (X axis). It provides features like garbage collection or a powerful type system as we have seen, while producing small, fast binaries.

Write your client and server with the same language

This is not a particular feature of OCaml, as JavaScript has allowed to write applications that run in the server and the client for years. But I want to mention it because with OCaml one can obtain the upsides of sharing the same language across boundaries, together with a precise type system, a fast compiler, and an expressive and consistent functional language.

At Ahrefs, we work with the same language in frontend and backend, including tooling like build system and package manager (we wrote about it here). Having the OCaml compiler know about all our code allows us to support several number of applications and systems with a reasonably sized team, working across different timezones.

I hope you enjoyed the article. If you want to learn more about OCaml as a TypeScript developer I can recommend the Melange documentation site, which has plenty of information about how to get started. This page in particular, Melange for X developers, summarizes some of the things we have discussed, and expanding on others.

If you want to share any feedback or comments, please comment on Twitter, or join the Reason Discord to ask questions or share your progress on any project or idea built with OCaml.

Originally published at https://www.javierchavarri.com.

Beyond TypeScript: Differences Between Typed Languages was originally published in Ahrefs on Medium, where people are continuing the conversation by highlighting and responding to this story.

Hide

What the interns have wrought, 2023 edition — Jane Street, Sep 12, 2023

What the interns have wrought, 2023 edition — Jane Street, Sep 12, 2023

We’re once again at the end of our internship season, and it’s my task to provide a few highlights of what the dev interns accomplished while they were here.

The State of the Art in Functional Programming: Tarides at ICFP 2023 — Tarides, Sep 08, 2023

The State of the Art in Functional Programming: Tarides at ICFP 2023 — Tarides, Sep 08, 2023

ICFP 2023

The 28th ACM Sigplan International Conference on Functional Programming is taking place in Seattle as I’m typing. This is the largest international research conference on functional programming, and this year’s event features fascinating keynotes (including one from OCaml’s very own Anil Madhavapeddy!), deep dives on various topics like compilation and verification, tutorials, networking opportunities, and workshops on several functional programming languages.

Out of this veritab…

Read more...ICFP 2023

The 28th ACM Sigplan International Conference on Functional Programming is taking place in Seattle as I’m typing. This is the largest international research conference on functional programming, and this year’s event features fascinating keynotes (including one from OCaml’s very own Anil Madhavapeddy!), deep dives on various topics like compilation and verification, tutorials, networking opportunities, and workshops on several functional programming languages.

Out of this veritable cornucopia of things to do and see, we’re of course most excited about the OCaml Workshop. The OCaml Users and Developers Workshop brings together a diverse group of experts and enthusiasts, from academia and businesses using OCaml in practice, to present and discuss recent developments in the OCaml ecosystem. This year, that includes presentations on everything from MetaOCaml, to an effects-based I/O in OCaml 5, and a complete OCaml compiler for WebAssembly. You can keep up with the conference on ACM Sigplan’s YouTube channel where talks are being live streamed.

At Tarides, our mission is to bring sustainable and secure software infrastructure to the world, and a powerful way to achieve this is by supporting forums that promote these goals. ICFP fosters the sharing of ideas, research, and implementation of sound functional programming principles, which is why Tarides is proud to be a silver sponsor of this year’s ICFP conference.

Several colleagues from Tarides are participating in the OCaml Workshop presenting their hard work and research on extending the language, type system, and tooling. In this post, I will give you an overview of each presentation from the Tarides team. Check out the OCaml Workshop program if you would like to explore it on your own.

Tarides at ICFP

ICFP Keynote - Programming for the Planet

Anil Madhavapeddy, our partner at the University of Cambridge, held a morning keynote speech on the role of computer systems in analysing complex data from around the globe to aid conservation efforts. Anil argues that using functional programming can lead to systems that are more resilient, predictable, and reproducible. In his presentation, he outlines the benefits of using functional programming in planetary science, and how the cross-disciplinary research his team is doing is having a tangible impact on conservation projects.

For more information on how Anil is using functional programming to help the planet, you can visit the Cambridge Centre for Carbon Credits’s website. To understand how OCaml and SpaceOS will become the new global standard for satellites, you can read our blog post on SpaceOS.

Eio 1.0 - Effects Based I/O for OCaml 5

This talk introduces the concurrency library Eio and the main features of the 1.0 release. After the release of OCaml 5, which brought support for effects and Multicore, there was demand for a new I/O library in OCaml that would unify the community around a single I/O API as well as introduce new modern features to OCaml’s I/O support.

The presentation outlines how Eio is structured, including how it uses effects so that operations don’t block the whole domain, and also highlights significant new features including modularity, integrations, and tracing. If you’re curious to know more about OCaml’s new concurrency library, check out the presentation on Eio 1.0 on Saturday the 9th of September.

Tutorial - Porting Lwt Applications to OCaml 5 and Eio

Thomas Leonard and Jon Ludlam present a tutorial on porting Lwt applications to OCaml 5 and Eio. The tutorial shows users how to incrementally convert an existing Lwt application to Eio using theLwt_eio compatibility package. Doing so will usually result in simpler code, better diagnostics, and better performance.

If you can’t attend the tutorial at ICFP, you can check out the instructions on GitHub and follow the steps. Please let us know how well the tutorial works for you, and if you have any questions don’t hesitate to ask!

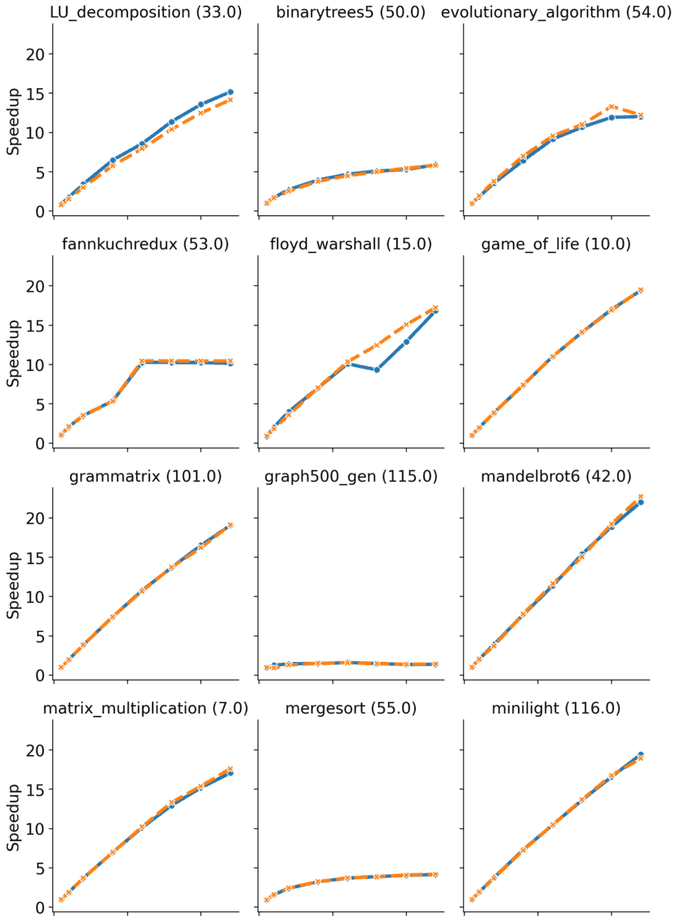

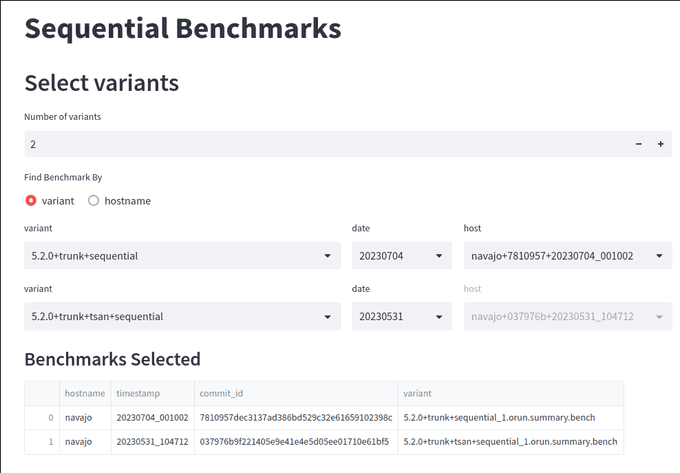

Runtime Detection of Data Races in OCaml with ThreadSanitizer

This presentation from Olivier Nicole and Fabrice Buoro focuses on ThreadSanitizer (TSan) and its ability to detect data races at runtime. With the new possibilities that parallel programming in OCaml brings, it also results in new kinds of bugs. Amongst these bugs, data races present a real danger as they are difficult to detect and can lead to very unexpected results.

That’s where TSan comes in! TSan is an open source library and program instrumentation pass to reliably detect data races at runtime. The presentation covers example usages of TSan, a look into how it works, interesting insights like challenges and limitations of the project, as well as related work including static and runtime detection. There will also be a demo of how to use it in your own code. If you want to know more, have a look at the talk on TSan at ICFP.

Building a Lock-Free STM for OCaml

This talk describes the process by which the kcas library, first developed to provide a primitive atomic lock-free multi-word compare-and-set operation, was recently turned into a proper lock-free software transactional memory implementation. By using transactional memory as an abstraction, Kcas offers developers both a relatively familiar programming model and composability.

The presentation details how Kcas composes transactions, its use cases and any trade offs, as well as the process behind how its design has evolved to its current state. Discover the full details by listening to the talk on Kcas, taking place on Saturday the 9th at the OCaml Workshop.

State of the OCaml Platform in 2023

The final presentation of the workshop provides an update on the OCaml Platform, including progress over the past few years and a roadmap for future work. The OCaml Platform has grown from one tool, opam, to a complete toolchain of reliable tools for OCaml developers.

The talk covers the main milestones of the past three years, including the release of odoc and the widespread adoption of Dune, before looking at the goals for the future which include seamless editor integration and filling in gaps in the OCaml development workflows. Be sure to check out the presentation on the OCaml Platform for more context and information.

We’d Love to Hear from You!

If you’re at ICFP please come and say hi, we’d love to chat about everything OCaml with you! The OCaml Workshop is located in the Grand Crescent, and the tutorial on Eio is at St Helens. The talks are available on ACM Sigplan’s youtube channel for remote viewing.

You can always tweet at us, or chat with the larger OCaml community on Discuss. Look out for more content on Tarides.com coming your way soon and sign up to our newsletter for up to date content - until next time!

Hide

Release of Frama-Clang 0.0.14 — Frama-C, Sep 07, 2023

Release of Frama-Clang 0.0.14 — Frama-C, Sep 07, 2023

Oxidizing OCaml: Data Race Freedom — Jane Street, Sep 01, 2023

Oxidizing OCaml: Data Race Freedom — Jane Street, Sep 01, 2023

OCaml with Jane Street extensions is available from our public opam repo. Only a slice of the features described in this series are currently implemented.

Your Programming Language and its Impact on the Cybersecurity of Your Application — Tarides, Aug 17, 2023

Your Programming Language and its Impact on the Cybersecurity of Your Application — Tarides, Aug 17, 2023

Did you know that the programming language you use can have a huge impact on the cybersecurity of your applications?

In a 2022 meeting of the Cybersecurity Advisory Committee, the Cybersecurity and Infrastructure Security Agency’s Senior Technical Advisor Bob Lord commented that: “About two-thirds of the vulnerabilities that we see year after year, decade after decade” are related to memory management issues.

Memory Unsafe Languages

One can argue that cyber vulnerabilities are simply a fac…

Read more...Did you know that the programming language you use can have a huge impact on the cybersecurity of your applications?

In a 2022 meeting of the Cybersecurity Advisory Committee, the Cybersecurity and Infrastructure Security Agency’s Senior Technical Advisor Bob Lord commented that: “About two-thirds of the vulnerabilities that we see year after year, decade after decade” are related to memory management issues.

Memory Unsafe Languages

One can argue that cyber vulnerabilities are simply a fact of life in the modern online world, which is why every application needs robust cyber security protections (applications, libraries, middleware, operating systems, tools, etc.). While this argument is not technically incorrect, there are still significant differences in the intrinsic security levels of different programming languages.

Computing devices today have access to huge amounts of memory in order to store, process, and retrieve information. Programming languages are used to describe the operations that a device needs to perform. The computer then interprets these operations to access and manipulate memory (of course, programming languages do many other things as well).

Among the various language paradigms, there are some widely used ones such as C and C++ that allow the developer to directly manipulate hardware memory. However, when a programmer writes code using these languages, it could result in attackers gaining access to hardware, stealing data, denying access to the user, and performing other malicious activities. Hence, these programming languages are termed as “memory-unsafe” languages.

Impact of Memory Exploits

Around 60-70% of cyber attacks (attacks on applications, the operating system, etc.) are due to the use of these memory-unsafe programming languages.

This remains true for any computing platform. Memory issues represented around 65% of critical security risks in the Chrome browser and Android operating system. Similarly, memory unsafety issues also represented around 65% of total reported issues for the Linux kernel in 2019. The Chromium web browser project has also reported that 70% of high-severity security bugs were related to memory safety. In iOS 12, 66.3% of vulnerabilities were related to handling memory.

The Solution: Memory Safety

All this begs the question: is there a solution that can eliminate risks that exist due to a programming language’s design, or is the only solution to use several layers of cybersecurity protection (code hardening, firewalls, etc.)?

Many cybersecurity and technology experts recommend using a “memory-safe” programming language, where a number of validation checks are performed during the translation from the human-readable programming language to the format that the machine reads. Many such programming languages exist, giving the developers several choices, for example: Go, Java, Ruby, Swift, and OCaml are all memory safe.

Does this mean that memory-safe languages are protected from all cyber attacks? No, but 60-70% of attacks are by design not permitted by the language. That is why most memory safe languages also offer crypto libraries, formal verification, and more in order to ensure the best possible cyber protection in addition to the strong protection the language itself provides. Of course, you also need to follow industry best practices for physical security, access controls, firewalls, data protection techniques, and other defence mechansims for people-centric security.

If you already work using memory-safe programming languages, you are on the right track. If you don’t, we would be glad to tell you why companies like Jane Street, Tezos, Microsoft, Tarides, and Meta use OCaml to provide not only the best possible cybersecurity but also exceptional coding flexibility.

Don’t hesitate to contact us via sales@tarides.com for more information or with any questions you may have.

References

-

Report: Future of Memory Safety. https://advocacy.consumerreports.org/research/report-future-of-memory-safety/

-

NSA releases guidance on how to protect against software memory safety issues. https://www.nsa.gov/Press-Room/News-Highlights/Article/Article/3215760/nsa-releases-guidance-on-how-to-protect-against-software-memory-safety-issues/

-

The Federal Government is moving on memory safety for Cybersecurity. https://www.nextgov.com/cybersecurity/2022/12/federal-government-moving-memory-safety-cybersecurity/381275/

-

Memory Safety Convening Report 1.1. https://advocacy.consumerreports.org/wp-content/uploads/2023/01/Memory-Safety-Convening-Report-1-1.pdf

-

Chromium project memory safety. https://www.chromium.org/Home/chromium-security/memory-safety/

On indefinite truth values — Andrej Bauer, Aug 12, 2023

On indefinite truth values — Andrej Bauer, Aug 12, 2023

In a discussion following a MathOverflow answer by Joel Hamkins, Timothy Chow and I got into a chat about what it means for a statement to “not have a definite truth value”. I need a break from writing the paper on countable reals (coming soon in a journal near you), so I thought it would be worth writing up my view of the matter in a blog post.

How are we to understand the statement “the Riemann hypothesis (RH) does not have a definite truth value”?

Let me first address two possible…

Read more...In a discussion following a MathOverflow answer by Joel Hamkins, Timothy Chow and I got into a chat about what it means for a statement to “not have a definite truth value”. I need a break from writing the paper on countable reals (coming soon in a journal near you), so I thought it would be worth writing up my view of the matter in a blog post.

How are we to understand the statement “the Riemann hypothesis (RH) does not have a definite truth value”?

Let me first address two possible explanations that in my view have no merit.

First, one might suggest that “RH does not have a definite truth value” is the same as “RH is neither true nor false”. This is nonsense, because “RH is neither true nor false” is the statement $\neg \mathrm{RH} \land \neg\neg\mathrm{RH}$, which is just false by the law of non-contradiction. No discussion here, I hope. Anyone claiming “RH is neither true nor false” must therefore mean that they found a paradox.

Second, it is confusing and even harmful to drag into this discussion syntactically invalid, ill-formed, or otherwise corrupted statements. To say something like “$(x + ( - \leq 7$ has no definite truth value” is meaningless. The notion of truth value does not apply to arbitrary syntactic garbage. And even if one thinks this is a good idea, it does not apply to RH, which is a well-formed formula that can be assigned meaning.

Having disposed of ill-fated attempts, let us ask what the precise mathematical meaning of the statement might be. It is important to note that we are discussing semantics. The truth value of a sentence $P$ is an element $I(P) \in B$ of some Boolean algebra $(B, 0, 1, {\land}, {\lor}, {\lnot})$, assigned by an interpretation function $I$. (I am assuming classical logic, but nothing really changes if we switch to intuitionistic logic, just replace Boolean algebras with Heyting algebras.) Taking this into account, I can think of three ways of explaining “RH does not have a definite truth value”:

-

The truth value $I(\mathrm{RH})$ is neither $0$ nor $1$. (Do not confuse this meta-statement with the object-statement $\neg \mathrm{RH} \land \neg\neg\mathrm{RH}$.) Of course, for this to happen one has to use a Boolean algebra that contains something other than $0$ and $1$.

-

The truth value of $I(\mathrm{RH})$ varies, depending on the model and the interpretation function. An example of this phenomenon is the continuum hypothesis, which is true in some set-theoretic models and false in others.

-

The interpretation function $I$ fails to assign a truth value to $\mathrm{RH}$.

Assuming we have set up sound and complete semantics, the first and the second reading above both amount to undecidability of RH. Indeed, if the truth value of RH is not $1$ across all models then RH is not provable, and if it is not fixed at $0$ then it is not refutable, hence it is undecidable. Conversely, if RH is undecidable then its truth value in the Lindenbaum-Tarski algebra is neither $0$ nor $1$. We may quotient the algebra so that the value becomes true or false, as we wish.

The third option says that one has got a lousy interpretation function and should return to the drawing board.

In some discussions “RH does not have a definite truth value” seems to take on an anthropocentric component. The truth value is indefinite because knowledge of it is lacking, or because there is a cognitive barrier to comprehending the statement, etc. I find these just as unappealing as the Brouwerian counterexamples arguing in favor of intuitionistic logic.

The only realm in which I reasonably comprehend “$P$ does not have a definite truth value” is pre-mathematical, or even philosophical. It may be the case that $P$ refers to pre-mathematical concepts lacking precise formal description, or whose existing formal descriptions are considered problematic. This situation is similar to the third one above, but cannot be just dismissed as technical deficiency. An illustrative example is Solomon Feferman's Does mathematics need new axioms? and the discussion found therein on the meaningfulness and the truth value of the continuum hypothesis. (However, I am not aware of anyone seriously arguing that the mathematical meaning of Riemann hypothesis is contentious.)

So, what do I mean by “RH does not have a definite truth value”? Nothing, I would never say that and I do not understand what it is supposed to mean. RH clearly has a definite truth value, in each model, and with some luck we are going to find out which one. (To preempt a counter-argument: the notion of “standard model” is a mystical concept, while those stuck in an “intended model” suffer from lack of imagination.)

Hide

Kcas: Building a Lock-Free STM for OCaml (2/2) — Tarides, Aug 10, 2023

Kcas: Building a Lock-Free STM for OCaml (2/2) — Tarides, Aug 10, 2023

This is the follow-up post continuing the discussion of the development of Kcas. Part 1 discussed the development done on the library to improve performance and add a transaction mechanism that makes it easy to compose atomic operations without really adding more expressive power.

In this part we'll discuss adding a fundamentally new feature to Kcas that makes it into a proper STM implementation.

Get Busy Waiting

If shared memory locations and transactions over them essentially replace traditio…

Read more...This is the follow-up post continuing the discussion of the development of Kcas. Part 1 discussed the development done on the library to improve performance and add a transaction mechanism that makes it easy to compose atomic operations without really adding more expressive power.

In this part we'll discuss adding a fundamentally new feature to Kcas that makes it into a proper STM implementation.

Get Busy Waiting

If shared memory locations and transactions over them essentially replace traditional mutexes, then one might ask what replaces condition variables. It is very common in concurrent programming for threads to not just want to avoid stepping on each other's toes, or the I of ACID, but to actually prefer to follow in each other's footsteps. Or, to put it more technically, wait for events triggered or data provided by other threads.

Following the approach introduced in the paper

Composable Memory Transactions,

I implemented a retry mechanism that allows a transaction to essentially wait on

arbitrary conditions over the state of shared memory locations. A transaction

may simply raise an exception,

Retry.Later,

to signal to the commit mechanism that a transaction should only be retried

after another thread has made changes to the shared memory locations examined by

the transaction.

A trivial example would be to convert a non-blocking take on a queue to a blocking operation:

let take_blocking ~xt queue =

match Queue.Xt.take_opt ~xt queue with

| None -> Retry.later ()

| Some elem -> elemOf course, the

Queue

provided by kcas_data already has a blocking take which essentially results in the above

implementation.

Perhaps the main technical challenge in implementing a retry mechanism in multicore OCaml is that it should perform blocking in a scheduler friendly manner such that other fibers, as in Eio, or tasks, as in Domainslib, are not prevented from running on the domain while one of them is blocked. The difficulty with that is that each scheduler potentially has its own way for suspending a fiber or waiting for a task.

To solve this problem such that we can provide an updated and convenient blocking experience, we introduced a library that provides a domain-local-await mechanism, whose interface is inspired by Arthur Wendling's proposal for the Saturn library. The idea is simple. Schedulers like Eio and Domainslib install their own implementation of the blocking mechanism, stored in a domain local variable, and then libraries like Kcas can obtain the mechanism to block in a scheduler friendly manner. This allows blocking abstractions to not only work on one specific scheduler, but also allows blocking abstractions to work across different schedulers.

Another challenge is the desire to support both conjunctive and disjunctive combinations of transactions. As explained in the paper Composable Memory Transactions, this in turn requires support for nested transactions. Consider the following attempt at a conditional blocking take from a queue:

let non_nestable_take_if ~xt predicate queue =

let x = Queue.Xt.take_blocking ~xt queue in

if not (predicate x) then

Retry.later ();

xIf one were to try to use the above to take an element from the

first

of two queues

Xt.first [

non_nestable_take_if predicate queue_a;

non_nestable_take_if predicate queue_b;

]one would run into the following problem: while only a value that passes the predicate would be returned, an element might be taken from both queues.

To avoid this problem, we need a way to roll back changes recorded by a transaction attempt. The way Kcas supports this is via an explicit scoping mechanism. Here is a working (nestable) version of conditional blocking take:

let take_if ~xt predicate queue =

let snap = Xt.snapshot ~xt in

let x = Queue.Xt.take_blocking ~xt queue in

if not (predicate x) then

Retry.later (Xt.rollback ~xt snap);

xFirst a

snapshot

of the transaction log is taken and then, in case the predicate is not

satisfied, a

rollback

to the snapshot is performed before signaling a retry. The obvious disadvantage of

this kind of explicit approach is that it requires more care from the

programmer. The advantage is that it allows the programmer to explicitly scope

nested transactions and perform rollbacks only when necessary and in a more

fine-tuned manner, which can allow for better performance.

With properly nestable transactions one can express both conjunctive and disjunctive compositions of conditional transactions.

As an aside, having talked about the splay tree a few times in my previous post, I should mention that the implementation of the rollback operation using the splay tree also worked out surprisingly nicely. In the general case, a rollback may have an effect on all accesses to shared memory locations recorded in a transaction log. This means that, in order to support rollback, worst case linear time cost in the number of locations accessed seems to be the minimum — no matter how transactions might be implemented. A single operation on a splay tree may already take linear time, but it is also possible to take advantage of the tree structure and sharing of the immutable spine of splay trees and stop early as soon as the snapshot and the log being rolled back are the same.

Will They Come

Blocking or retrying a transaction indefinitely is often not acceptable. The transaction mechanism with blocking is actually already powerful enough to support timeouts, because a transaction will be retried after any location accessed by the transaction has been modified. So, to have timeouts, one could create a location, make it so that it is changed when the timeout expires, and read that location in the transaction to determine whether the timeout has expired.

Creating, checking, and also cancelling timeouts manually can be a lot of work.

For this reason Kcas was also extended with direct support for timeouts. To

perform a transaction with a timeout one can simply explicitly specify a

timeoutf in seconds:

let try_take_in ~seconds queue =

Xt.commit ~timeoutf:seconds { tx = Queue.Xt.take_blocking queue }Internally Kcas uses the domain-local-timeout library for timeouts. The OCaml standard library doesn't directly provide a timeout mechanism, but it is a typical service provided by concurrent schedulers. Just like with the previously mentioned domain local await, the idea with domain local timeout is to allow libraries like Kcas to tap into the native mechanism of whatever scheduler is currently in use and to do so conveniently without pervasive parameterisation. More generally this should allow libraries like Kcas to be scheduler agnostic and help to avoid duplication of effort.

Hollow Man

Let's recall the features of Kcas transactions briefly.

First of all, passing the transaction ~xt through the computation allows

sequential composition of transactions:

let bind ~xt a b =

let x = a ~xt in

b ~xt xThis also gives conjunctive composition as a trivial consequence:

let pair ~xt a b =

(a ~xt, b ~xt)Nesting, via

snapshot

and

rollback,

allows conditional composition:

let if_else ~xt predicate a b =

let snap = Xt.snapshot ~xt in

let x = a ~xt in

if predicate x then

x

else begin

Xt.rollback ~xt snap;

b ~xt

endNesting combined with blocking, via the

Retry.Later

exception, allows disjunctive composition

let or_else ~xt a b =

let snap = Xt.snapshot ~xt in

match a ~xt with

| x -> x

| exception Retry.Later ->

Xt.rollback ~xt snap;

b ~xtof blocking transactions, which is also supported via the

first

combinator.

What is Missing?

The limits of my language mean the limits of my world. — Ludwig Wittgenstein

The main limitation of transactions is that they are invisible to each other. A transaction does not directly modify any shared memory locations and, once it does, the modifications appear as atomic to other transactions and outside observers.

The mutual invisibility means that

rendezvous between two

(or more) threads cannot be expressed as a pair of composable transactions. For

example, it is not possible to implement synchronous message passing as can be

found e.g. in

Concurrent ML,

Go, and various other languages and libraries, including zero

capacity Eio

Streams,

as simple transactions with a signature such as follows:

module type Channel = sig

type 'a t

module Xt : sig

val give : xt:'x Xt.t -> 'a t -> 'a -> unit

val take : xt:'x Xt.t -> 'a t -> 'a

end

endLanguages such as Concurrent ML and Go allow disjunctive composition of such synchronous message passing operations and some other libraries even allow conjunctive, e.g. CHP, or even sequential composition, e.g. TE and Reagents, of such message passing operations.

Although the above Channel signature is unimplementable, it does not mean that

one could not implement a non-compositional Channel

module type Channel = sig

type 'a t

val give : 'a t -> 'a -> unit

val take : 'a t -> 'a

endor implement a compositional message passing model that allows such operations to be composed. Indeed, both the CHP and TE libraries were implemented on top of Software Transactional Memory with the same fundamental invisibility of transactions. In other words, it is possible to build a new composition mechanism, distinct from transactions, by using transactions. To allow such synchronisation between threads requires committing multiple transactions.

Torn Reads

The k-CAS-n-CMP algorithm underlying Kcas ensures that it is not possible to read uncommitted changes to shared memory locations and that an operation can only commit successfully after all of the accesses taken together have been atomic, i.e. strictly serialisable or both linearisable and serialisable in database terminology. These are very strong guarantees and make it much easier to implement correct concurrent algorithms.

Unfortunately, the k-CAS-n-CMP algorithm does not prevent one specific concurrency anomaly. When a transaction reads multiple locations, it is possible for the transaction to observe an inconsistent state when other transactions commit changes between reads of different locations. This is traditionally called read skew in database terminology. Having observed such an inconsistent state, a Kcas transaction cannot succeed and must be retried.

Even though a transaction must retry after having observed read skew, unless taken into account, read skew can still cause serious problems. Consider, for example, the following transaction:

let unsafe_subscript ~xt array index =

let a = Xt.get ~xt array in

let i = Xt.get ~xt index in

a.(i)The assumption is that the array and index locations are always updated

atomically such that the subscript operation should be safe. Unfortunately due

to read skew the array and index might not match and the subscript operation

could result in an "index out of bounds" exception.

Even more subtle problems are possible. For example, a balanced binary search

tree implementation using

rotations can, due to read skew,

be seen to have a cycle. Consider the below diagram. Assume that a lookup for

node 2 has just read the link from node 3 to node 1. At that point another

transaction commits a rotation that makes node 3 a child of node 1. As the

lookup reads the link from node 1 it leads back to node 3 creating a cycle.

There are several ways to deal with these problems. It is, of course, possible to use ad hoc techniques, like checking invariants manually, within transactions. The Kcas library itself addresses these problems in a couple of ways.

First of all, Kcas performs periodic validation of the entire transaction log

when an access, such as get or set, of a shared memory location is made

through the transaction log. It would take quadratic time to validate the entire

log on every access. To avoid changing the time complexity of transactions, the

number of accesses between validations is doubled after each validation.

Periodic validation is an effective way to make loops that access shared memory

locations, such as the lookup of a key from a binary search tree, resistant

against read skew. Such loops will eventually be aborted on some access and will

then be retried. Periodic validation is not effective against problems that

might occur due to non-transactional operations made after reading inconsistent

state. For those cases an explicit

validate

operation is provided that can be used to validate that the accesses of

particular locations have been atomic:

let subscript ~xt array index =

let a = Xt.get ~xt array in

let i = Xt.get ~xt index in

(* Validate accesses after making them: *)

Xt.validate ~xt index;

Xt.validate ~xt array;

a.(i)It is entirely fair to ask whether it is acceptable for an STM mechanism to allow read skew. A candidate correctness criterion for transactional memory called "opacity", introduced in the paper On the correctness of transactional memory, does not allow it. The trade-off is that the known software techniques to provide opacity tend to introduce a global sequential bottleneck, such as a global transaction version number accessed by every transaction, that can and will limit scalability especially when transactions are relatively short, which is usually the case.

At the time of writing this there are several STM implementations that do not provide opacity. The current Haskell STM implementation, for example, introduced in 2005, allows similar read skew. In Haskell, however, STM is implemented at the runtime level and transactions are guaranteed to be pure by the type system. This allows the Haskell STM runtime to validate transactions when switching threads. Nevertheless there have been experiments to replace the Haskell STM using algorithms that provide opacity as described in the paper Revisiting software transactional memory in Haskell, for example. The Scala ZIO STM also allows read skew. In his talk Transactional Memory in Practice, Brett Hall describes their experience in using a STM in C++ that also allows read skew.

It is not entirely clear how problematic it is to have to account for the possibility of read skew. Although I expect to see read skew issues in the future, the relative success of the Haskell STM would seem to suggest that it is not necessarily a show stopper. While advanced data structure implementations tend to have intricate invariants and include loops, compositions of transactions using such data structures, like the LRU cache implementation, tend to be loopless and relatively free of such invariants and work well.

Tomorrow May Come

At the time of writing this, the kcas and kcas_data packages are still

marked experimental, but are very close to being labeled 1.0.0. The core Kcas

library itself is more or less feature complete. The Kcas data library, by its

nature, could acquire new data structure implementations over time, but there is

one important feature missing from Kcas data — a bounded queue.

It is, of course, possible to simply compose a transaction that checks the length of a queue. Unfortunately that would not perform optimally, because computing the exact length of a queue unavoidably requires synchronisation between readers and writers. A bounded queue implementation doesn't usually need to know the exact length — it only needs to have a conservative approximation of whether there is room in the queue and then the computation of the exact length can be avoided much of the time. Ideally the default queue implementation would allow an optional capacity to the specified. The challenge is to implement the queue without making it any slower in the unbounded case.

Less importantly the Kcas data library currently does not provide an ordered map nor a priority queue. Those serve use cases that are not covered by the current selection of data structures. For an ordered map something like a WAVL tree could be a good starting point for a reasonably scalable implementation. A priority queue, on the other hand, is more difficult to scale, because the top element of a priority queue might need to be examined or even change on every mutation, which makes it a sequential bottleneck. On the other hand, updating elements far from the top shouldn't require much synchronisation. Some sort of two level scheme like a priority queue of per domain priority queues might provide best of both worlds.

But Why?

If you look at a typical textbook on concurrent programming it will likely tell you that the essence of concurrent programming boils down to two (or three) things:

- independent sequential threads of control, and

- mechanisms for threads to communicate and synchronise.

The first bullet on that list has received a lot of focus in the form of libraries like Eio and Domainslib that utilise OCaml's support for algebraic effects. Indeed, the second bullet is kind of meaningless unless you have threads. However, that does not make it less important.

Programming with threads is all about how threads communicate and synchronise with each other.

A survey of concurrent programming techniques could easily fill an entire book, but if you look at most typical programming languages, they provide you with a plethora of communication and synchronisation primitives such as

- atomic operations,

- spin locks,

- barriers and count down latches,

- semaphores,

- mutexes and condition variables,

- message queues,

- other concurrent collections,

- and more.

The main difficulty with these traditional primitives is their relative lack of composability. Every concurrency problem becomes a puzzle whose solution is some ad hoc combination of these primitives. For example, given a concurrent thread safe stack and a queue it may be impossible to atomically move an element from the stack to the queue without wrapping both behind some synchronisation mechanism, which also likely reduces scalability.

There are also some languages based on asynchronous message passing with the ability to receive multiple messages selectively using both conjunctive and disjunctive patterns. A few languages are based on rendezvous or synchronous message passing and offer the ability to disjunctively and sometimes also conjunctively select between potential communications. I see these as fundamentally different from the traditional primitives as the number of building blocks is much smaller and the whole is more like unified language for solving concurrency problems rather than just a grab bag of non-composable primitives. My observation, however, has been that these kind of message passing models are not familiar to most programmers and can be challenging to program with.

As an aside, why should one care about composability? Why would anyone care about being able to e.g. disjunctively either pop an element from a stack or take an element from a queue, but not both, atomically? Well, it is not about stacks and queues, those are just examples. It is about modularity and scalability. Being able to, in general, understand independently developed concurrent abstractions on their own and to also combine them to form effective and efficient solutions to new problems.

Another approach to concurrent programming is transactions over mutable data structures whether in the form of databases or Software Transactional Memory (STM). Transactional databases, in particular, have definitely proven to be a major enabler. STM hasn't yet had a similar impact. There are probably many reasons for that. One probable reason is that many languages already offered a selection of familiar traditional primitives and millions of lines of code using those before getting STM. Another reason might be that attempts to provide STM in a form where one could just wrap any code inside an atomic block and have it work perfectly proved to be unsuccessful. This resulted in many publications and blog posts, e.g. A (brief) retrospective on transactional memory, discussing the problems resulting from such doomed attempts and likely contributed to making STM seem less desirable.

However, STM is not without some success. More modest, and more successful, approaches either strictly limit what can be performed atomically or require the programmer to understand the limits and program accordingly. While not a panacea, STM provides both composability and a relatively simple and familiar programming model based on mutable shared memory locations.

Crossroads

Having just recently acquired the ability to have multiple domains running in parallel, OCaml is in a unique position. Instead of having a long history of concurrent multicore programming we can start afresh.

What sort of model of concurrent programming should OCaml offer?

One possible road for OCaml to take would be to offer STM as the go-to approach for solving most concurrent programming problems.

Until Next Time

I've had a lot of fun working on Kcas. I'd like to thank my colleagues for putting up with my obsession to work on it. I also hope that people will find Kcas and find it useful or learn something from it!

Hide

Kcas: Building a Lock-Free STM for OCaml (1/2) — Tarides, Aug 07, 2023

Kcas: Building a Lock-Free STM for OCaml (1/2) — Tarides, Aug 07, 2023

In the past few months I've had the pleasure of working on the Kcas library. In this and a follow-up post, I will discuss the history and more recent development process of optimising Kcas and turning it into a proper Software Transactional Memory (STM) implementation for OCaml.

While this is not meant to serve as an introduction to programming with Kcas, along the way we will be looking at a few code snippets. To ensure that they are type correct — the best kind of correct* — I'll use the M…

Read more...In the past few months I've had the pleasure of working on the Kcas library. In this and a follow-up post, I will discuss the history and more recent development process of optimising Kcas and turning it into a proper Software Transactional Memory (STM) implementation for OCaml.

While this is not meant to serve as an introduction to programming with Kcas, along the way we will be looking at a few code snippets. To ensure that they are type correct — the best kind of correct* — I'll use the MDX tool to test them. So, before we continue, let's require the libraries that we will be using:

# #require "kcas"

# open Kcas

# #require "kcas_data"

# open Kcas_dataAll right, let us begin!

Origins

Contrary to popular belief, the name "Kcas" might not be an abbreviation of KC and Sadiq. Sadiq once joked "I like that we named the library after KC too." — two early contributors to the library. The Kcas library was originally developed for the purpose of implementing Reagents for OCaml and is an implementation of multi-word compare-and-set, often abbreviated as MCAS, CASN, or — wait for it — k-CAS.

But what is this multi-word compare-and-set?